In AI competitions similar to the ones you can find on CodinGame, rarely are the participants limited by their programming skills – you don’t need to have 10 years of coding experience to reimplement an A* algorithm or to build a working simulation.

The key differences that separate the coders at the top of the ladder are their approaches, different scoring strategies or their completely different learning models.

Picking the correct tools for the job is the most important part of such competition. Like the Pareto principle says, investing 20% of your time on a good design of a learning program will contribute to 80% of your success (or failure, for that matter).

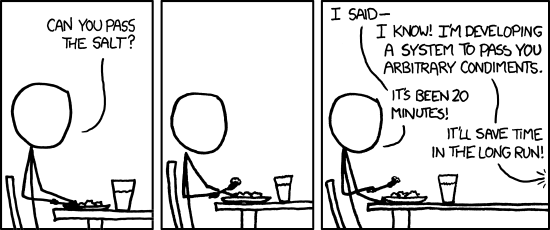

Investing time on a blueprint will usually pay off, since having a clear goal in mind while writing code can save you from blankly staring at the screen and wandering off with your thoughts into the realms of “TODO.”

The more carefully thought out your program is, the more you can constrain the learning task, thus saving time in the long run. Just don’t forget to actually implement your ideas once in a while 😉

The Training Experience

Feedback

What is feedback and why is it important for your AI?

Feedback is the information that your AI will receive about its performance. Positive feedback shows that the AI is on the right track, while negative feedback aims to discourage the AI from doing anything that leads to a bad outcome. The feedback to your AI will be provided by a so-called experiment generator.

The experiment generator is simply a program that can provide both tasks for your AI, and either feedback about how the AI performed on those tasks or what the AI should have done. Let’s take tic-tac-toe, for example. First, your experiment generator provides a board state to your AI:

O’s turn in a game of tic-tac-toe

Then it either scores a move based on how good it is (a positive score for placing an O on the 4th tile, a negative score for all other moves) or simply provides “4” as the correct solution.

With a true learning program in mind, you have to start by thinking about how you plan on training it. But what does “training a learning program” even mean?

Learning

A program is said to learn if its performance on a task improves with experience. The “training” simply consists of providing the program with enough relevant data for it to learn from. There are, of course, multiple types of training, with the most preferred one being direct training.

Direct training is a form of training in which your experiment generator will provide problems and optimal solutions to them, from which your system can then learn. This form of training is extremely effective, but rarely feasible, since the experiment generator has to come up with the correct move or solution to any problem that it creates.

- Imperfect information is one roadblock that can stop you from using direct training. You cannot guarantee an answer when analyzing games with imperfect information or games that involve randomness.

(for example the fog of war in Codebusters) - Incomplete information is a similar problem, which means that the program does not know what kind of opponent it is up against or what priorities the opponent has. A suboptimal move might be the winning move against some types of opponents and/or their strategies; however, the system will have no way of differentiating between the two opponents.

(in Legends of Code and Magic, for example, you don’t know the deck cards of your opponent) - The last and most common brick wall that people run into while trying to use direct training is the complexity of the task. More often than not, the task is so complex that brute-forcing all solutions just to find the optimal one is simply not possible.

A*Craft – There are 6.48*10^110 possible arrow placement variations in this level

An alternative to direct learning is indirect learning. This form of learning uses only the outcome or score of a game to infer the correctness of each specific move. Using this type of training is a lot harder due to the fact that the system has to determine which moves were correct, despite losing and vice versa. This is why direct feedback is typically a lot easier to train with and yields more successful results.

Training Data Control

The experiment generator is also responsible for the control of the training data. It may have full control and generate the training examples. It may also allow the learning system to explore different states on its own, merely providing the correct moves and solutions for the training.

In other situations, the experiment generator may have no control whatsoever over what training data the system gets to work with. This is often the case with genetic algorithms (GA). The whole training process lets the fittest of the population dictate what line of play is the most promising.

A well made GA can often surprise its own creator by developing strategies that the programmer himself would never have considered*. While emergent behavior isn’t necessarily more efficient, it’s something that can be used to deal with tasks that even the programmer doesn’t have a good understanding of.

*Remember how Jeff06 was amazed to see his GA making the two pods of Coders Strike Back collide like in a game of pool?

Training Data Distribution

Assuming you do know what’s best for your AI and what training data it should use, the final important attribute is creating a training experience that can accurately represent the environment where the performance will be measured.

A very common sentence you may read in the chat during contests is, “I beat the #1 player 60% of the time!” from someone who is a couple hundred ranks below. Training and tweaking an AI with data that is distributed much differently and doesn’t represent the final system will lead to the most frustrating problems, such as code improvements that result in you dropping ranks, or achieving high win rates against top-ranked players, but never getting to play against them.

Training against extremely good bots can also result in your AI failing to capitalize on basic mistakes (since those don’t appear in the training) or become extremely biased against a specific strategy, and thus weak against the most common ones. The same danger arises with systems that train against themselves. If your new bot beats your old one 60% of the time, that’s not necessarily going to get reflected on your leaderboard ranking.

Mastery of one distribution will not necessarily lead to a strong performance over other distributions. The crucial assumption, when it comes to training, is that your distribution of training examples is identical to the distribution of test examples, even if the assumption has to be violated in practice.

From making an offline simulation to scraping CodinGame leaderboards for replays, deciding how you are going to provide the training data and feedback is the first important step towards a successful AI. Then, you can start working on the core of your AI, namely the:

Target Function

Design

The target function describes what your AI will actually be learning. Starting from a complex target function, the first step is to shrink it down as much as possible to simplify the learning process. Let’s take Code of Kutulu, for example:

The most comprehensive target function we can think of is simply win();. Given a game of Code of Kutulu, the function should win it (if possible)! In this particular case – winning means making a series of valid moves that are the best in every situation.

From this definition, we can shrink our target function into something a lot more realistic, like makeBestMove();. Assuming the function does indeed make the best possible move in every single turn, it should work identically to our previous “win” function. The best move should put you into the best possible situation relative to all other moves available to you. It means that we need to be able to evaluate every single move available to us.

A function like evaluateMove(move); would allow sorting the moves by how good they are, and picking the best one – just like the previous function did. Going deeper, a valid way to evaluate a move would be to simulate the state that would result from performing the move, and evaluate the board state instead.

With that in mind, we can see that we went from something very abstract like win(); to something quite tangible, like evaluateBoardState(state);. Best of all, in a perfect world, both of these functions lead to identical outcomes!

Representation

For the representation of a target function, the possibilities are endless – from a large lookup table with hardcoded scores for every existing board state in the game to an artificial neural network solely to evaluate a state.

Generally speaking, the choice of representation involves a crucial trade-off – expressive representations let us represent a close to ideal target function that will be capable of differentiating between even the smallest differences. However, a lot more training data will be required just to break even with a simpler representation.

For the sake of simplicity, let’s make an example with a simple representation of a board state in Code of Kutulu, using only 4 features:

- n1 the sanity,

- n2 the distance to the closest explorer,

- n3 the distance to the closest wanderer,

- n4 the amount of slashers in our line of sight.

Without any prior knowledge about the importance of each of these features, you now have enough information to complete the program. The importance of each and every feature should be figured out by the learning program itself. We can accomplish this by assigning weights for each feature and optimizing them:

w0 + w1*n1 + w2*n2 + w3*n3 + w4*n4

Approximation

Now that we’ve gone from abstract functions to a simple linear function, our job is as simple as tweaking the coefficients and assessing the effects of the change.

When working with direct training, a simple least mean squares algorithm will tweak the coefficients to minimize the difference between the score calculated by your AI and the perfectly accurate score that was provided by your experiment generator (or brute forced offline).

However, when working with indirect training, the winrate of the program will be the only indicator. To help with evaluating different intermediate board states, one could also use the score of a successor state to evaluate a current state. While it may sound strange that evaluating a successor state will improve the evaluation function itself, it has been proven that such iterative estimating based off of successor states converges towards a function with perfect weights.

Optimization

If you did everything correctly, then you have a working program, worthy of being called Artificial Intelligence!…but you’re still not rank 1 after the 1st submit, are you? This is where optimizing and nesting AIs comes into play. Say you’ve picked your algorithm for your next contest, and upon finishing it you get into the top 100; however, your competitive drive won’t let you sleep with those rookie ranks…so where do you go from there?

You could tweak things yourself:

- Use your domain knowledge to create new features to help your AI manually. In other words – improve your AI core based on your knowledge about the task.

- Modify the data you’re working with to better match the reality.

- Modify the representation function – find out what features should have been considered to avoid getting into unfavorable situations.

Or you could nest your bot with another machine-learning algorithm and let an AI improve your AI:

- Use metaheuristics (like GA) to obtain optimal coefficients for your target function.

- Replace your target function with an artificial neural network and train it instead.

- Tune your hyperparameters with extensive testing to find the combination that works the best.

There are always things to improve and the number of options can be discouraging. Your 6th sense for finding the bottleneck where your bot is hiding comes with experience – just knowing how to improve something in theory is not enough.

Are you already anticipating the next contest? Be sure to register so you don’t miss your chance to prove your skill!

This blog post was inspired and based off of the work from Tom M. Mitchell: “Machine Learning.” It’s a great book for everyone interested in the field – serving as an introduction to various approaches to machine learning, providing exercises for those who are looking for a challenge and providing in-depth explanations about various algorithms like neural networks, Bayesian learning, genetic algorithms reinforcement learning and many more.